#include <QLearningEDecreasingPolicy.h>

QLearningEDecreasingPolicy::QLearningEDecreasingPolicy(float epsilon, float decreaseConstant)

virtual void QLearningEDecreasingPolicy::chooseAction(Action* action, const Observation* observation)

This method is implemented by subclasses. It chooses an action based on the given observation `observation` and stores the result in `action`.

Reimplemented from QLearningEGreedyPolicy.
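The selection rule is the epsilon-greedy one inherited from QLearningEGreedyPolicy. The difference is that the exploration probability comes from getCurrentEpsilon() and shrinks over time. The sketch below shows epsilon-greedy selection in isolation. It assumes the action values for the current observation are already available as a `std::vector<float>`; that layout and the helper name `chooseEpsilonGreedy` are hypothetical, because the library's Action, Observation, and Q-function types are not documented on this page.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <iterator>
#include <random>
#include <vector>

// Hypothetical helper (not the library's API): picks an action index epsilon-greedily.
// qValues: estimated value of each action for the current observation (assumed layout).
// epsilon: exploration probability, e.g. the value returned by getCurrentEpsilon().
std::size_t chooseEpsilonGreedy(const std::vector<float>& qValues, float epsilon,
                                std::mt19937& rng) {
  assert(!qValues.empty());  // there must be at least one action to choose from
  std::uniform_real_distribution<float> coin(0.0f, 1.0f);
  if (coin(rng) < epsilon) {
    // Explore: pick any action uniformly at random.
    std::uniform_int_distribution<std::size_t> pick(0, qValues.size() - 1);
    return pick(rng);
  }
  // Exploit: pick the action with the highest estimated value (first one on ties).
  return static_cast<std::size_t>(
      std::distance(qValues.begin(), std::max_element(qValues.begin(), qValues.end())));
}
```

With epsilon = 0 the helper always returns the greedy action. As epsilon decays toward 0, exploration becomes rarer, which is the behavior the decreasing policy is meant to produce.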

virtual float QLearningEDecreasingPolicy::getCurrentEpsilon() const

Returns the current epsilon value, i.e. epsilon / (1 + t * decreaseConstant), where t is the time step.
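As a worked example of this formula: with epsilon = 0.1 and decreaseConstant = 0.01, the current epsilon is 0.1 at t = 0, halves to 0.05 at t = 100 (divisor 1 + 100 * 0.01 = 2), and reaches 0.01 at t = 900 (divisor 10). With decreaseConstant = 0 it stays at 0.1, i.e. plain epsilon-greedy. The standalone function below computes the same schedule; the name `decayedEpsilon` is hypothetical, and treating t as the number of steps since init() is an assumption.

```cpp
#include <cassert>

// Hypothetical helper reproducing the documented schedule:
//   epsilon_t = epsilon / (1 + t * decreaseConstant)
// t is assumed to count time steps since the policy was (re)initialized.
float decayedEpsilon(float epsilon, float decreaseConstant, unsigned long t) {
  assert(decreaseConstant >= 0.0f);  // documented constraint: decreaseConstant >= 0
  return epsilon / (1.0f + static_cast<float>(t) * decreaseConstant);
}
```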

virtual void QLearningEDecreasingPolicy::init()

float QLearningEDecreasingPolicy::_epsilonDiv

float QLearningEDecreasingPolicy::decreaseConstant

The decrease constant. Its value should be >= 0 and is usually in [0, 1]. It is applied in a similar fashion to the decrease constant used for stochastic gradient descent (see NeuralNetwork.h). Here, it slowly decreases the epsilon value, so that the agent's policy shifts over time from exploratory to greedy.
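The member _epsilonDiv is not documented here. One plausible role, which is purely an assumption, is a running divisor equal to 1 + t * decreaseConstant that is reset by init() and increased by decreaseConstant on each step, so that t never has to be stored. The class below sketches that idea; `EpsilonSchedule`, `reset`, `step`, and `current` are hypothetical names, not part of the library.

```cpp
#include <cassert>

// Hypothetical sketch (not the library's implementation): keeps the divisor
// 1 + t * decreaseConstant incrementally instead of storing the step count t.
class EpsilonSchedule {
 public:
  EpsilonSchedule(float epsilon, float decreaseConstant)
      : epsilon_(epsilon), decreaseConstant_(decreaseConstant), epsilonDiv_(1.0f) {
    assert(decreaseConstant >= 0.0f);  // documented constraint
  }

  // Restart the schedule at t = 0 (analogous in spirit to init()).
  void reset() { epsilonDiv_ = 1.0f; }

  // Current exploration rate: epsilon / (1 + t * decreaseConstant).
  float current() const { return epsilon_ / epsilonDiv_; }

  // Advance one time step, e.g. after each chosen action.
  void step() { epsilonDiv_ += decreaseConstant_; }

 private:
  float epsilon_;
  float decreaseConstant_;
  float epsilonDiv_;  // equals 1 + t * decreaseConstant_ after t calls to step()
};
```

The incremental form costs one addition per step. The trade-off is that repeated float additions accumulate rounding error over very long runs, whereas computing 1 + t * decreaseConstant from an integer counter does not.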


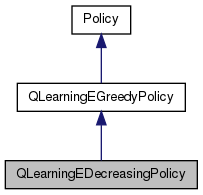

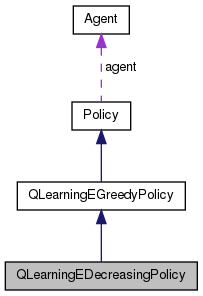

Public Member Functions inherited from QLearningEGreedyPolicy

Public Member Functions inherited from Policy

Public Attributes inherited from QLearningEGreedyPolicy

Public Attributes inherited from Policy

1.8.3.1
