#include <QLearningSoftmaxPolicy.h>

Public Member Functions | |

| QLearningSoftmaxPolicy (float temperature=1.0, float epsilon=0.0) | |

| virtual | ~QLearningSoftmaxPolicy () |

| virtual void | chooseAction (Action *action, const Observation *observation) |

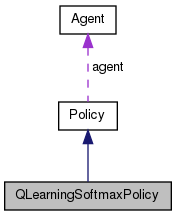

Public Member Functions inherited from Policy | |

| Policy () | |

| virtual | ~Policy () |

| virtual void | init () |

| virtual void | setAgent (Agent *agent_) |

Public Attributes | |

| float | temperature |

| float | epsilon |

Public Attributes inherited from Policy | |

| Agent * | agent |

Detailed Description

Implements the softmax policy. The class takes an optional ε (epsilon) parameter that behaves similarly to the one in the ε-greedy policy: with probability ε the action is chosen uniformly at random from the available actions, and with probability (1 − ε) the policy falls back to softmax, i.e. it still picks at random, but this time according to the softmax distribution.
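The two-stage draw described above can be sketched as follows. This is a minimal illustration, not the code in QLearningSoftmaxPolicy.cpp: `chooseIndex` is a hypothetical helper, actions are represented by plain indices, and the unnormalised softmax weights are assumed to have been computed already.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical helper (not part of the library): returns the index of the
// chosen action. `weights` holds one non-negative, unnormalised softmax
// weight per action and is assumed to be precomputed by the caller.
std::size_t chooseIndex(const std::vector<double>& weights, double epsilon,
                        std::mt19937& rng) {
  assert(!weights.empty());
  std::uniform_real_distribution<double> coin(0.0, 1.0);
  if (epsilon >= 1.0 || (epsilon > 0.0 && coin(rng) < epsilon)) {
    // Exploration branch: every action is equally likely.
    std::uniform_int_distribution<std::size_t> uniform(0, weights.size() - 1);
    return uniform(rng);
  }
  // Softmax branch: sample an index in proportion to its weight.
  std::discrete_distribution<std::size_t> softmax(weights.begin(),
                                                  weights.end());
  return softmax(rng);
}
```

With epsilon left at its default of 0.0 the exploration branch is never taken, so the choice is driven by the softmax weights alone.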

Constructor & Destructor Documentation

| QLearningSoftmaxPolicy::QLearningSoftmaxPolicy (float temperature=1.0, float epsilon=0.0) |

| virtual QLearningSoftmaxPolicy::~QLearningSoftmaxPolicy () |

Member Function Documentation

| virtual void QLearningSoftmaxPolicy::chooseAction (Action *action, const Observation *observation) |

This method is implemented by subclasses. It chooses an action based on the observation passed in observation and stores the result in action.

Implements Policy.

Member Data Documentation

| float QLearningSoftmaxPolicy::epsilon |

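Optional probability of choosing the action uniformly at random instead of sampling from the softmax distribution (see the Detailed Description). Defaults to 0.0.

- See also: QLearningEGreedyPolicy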

| float QLearningSoftmaxPolicy::temperature |

The temperature controls the "peakiness" (or "greediness") of the policy. A higher temperature yields a more peaked (greedier) distribution, whereas a lower temperature yields flatter, more uniformly distributed choices. Defaults to 1.0.
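This page does not give the formula that turns action values into probabilities. One weighting that matches the behaviour described here (a higher temperature gives a more peaked distribution) is exp(temperature · Q). The sketch below assumes that form. It is not taken from QLearningSoftmaxPolicy.cpp, and `softmaxProbabilities` is a hypothetical helper. The common Boltzmann form exp(Q / T) behaves the opposite way, so check the implementation before relying on either.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of the library): returns probabilities
// proportional to exp(temperature * q[i]).
// ASSUMPTION: the temperature multiplies the action values, so that larger
// temperatures sharpen the distribution as this page describes.
std::vector<double> softmaxProbabilities(const std::vector<double>& q,
                                         double temperature) {
  assert(!q.empty());
  const double qMax = *std::max_element(q.begin(), q.end());
  std::vector<double> p(q.size());
  double sum = 0.0;
  for (std::size_t i = 0; i < q.size(); ++i) {
    // Subtracting qMax does not change the normalised result, and it keeps
    // exp() from overflowing when the temperature is non-negative.
    p[i] = std::exp(temperature * (q[i] - qMax));
    sum += p[i];
  }
  for (double& v : p) {
    v /= sum;  // normalise so the probabilities sum to 1
  }
  return p;
}
```

Under this assumption a temperature of 0 gives every action the same probability, and increasing the temperature moves probability mass towards the action with the highest value.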

The documentation for this class was generated from the following files:

- src/qualia/rl/QLearningSoftmaxPolicy.h

- src/qualia/rl/QLearningSoftmaxPolicy.cpp

1.8.3.1
