#include <TDTrainer.h>

Public Member Functions | |

| TDTrainer (QFunction *qFunction, unsigned int observationDim, ActionProperties *actionProperties, float lambda, float gamma, bool offPolicy=false) | |

| virtual | ~TDTrainer () |

| virtual void | init () |

| virtual void | step (const RLObservation *lastObservation, const Action *lastAction, const RLObservation *observation, const Action *action) |

| Performs a training step. | |

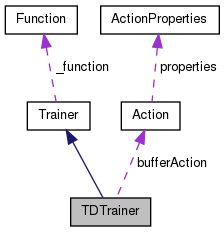

Public Member Functions inherited from Trainer | |

| Trainer (Function *function) | |

| Constructor. | |

| virtual | ~Trainer () |

| int | nEpisodes () const |

Public Attributes | |

| real * | eTraces |

| The eligibility traces. | |

| float | gamma |

| float | lambda |

| bool | offPolicy |

| Action | bufferAction |

| unsigned int | observationDim |

| unsigned int | actionDim |

Public Attributes inherited from Trainer | |

| Function * | _function |

| The function this Trainer is optimizing. | |

| int | _nEpisodes |

| The number of episodes this trainer went through (read-only). | |

Detailed Description

This class trains a QFunction using the Temporal-Difference (TD-λ) algorithm.

Constructor & Destructor Documentation

| TDTrainer::TDTrainer (QFunction *qFunction, unsigned int observationDim, ActionProperties *actionProperties, float lambda, float gamma, bool offPolicy = false) |

| virtual TDTrainer::~TDTrainer () |

Member Function Documentation

| virtual void TDTrainer::init () |

Reimplemented from Trainer.

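| virtual void TDTrainer::step (const RLObservation *lastObservation, const Action *lastAction, const RLObservation *observation, const Action *action) |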

Performs a training step.

Member Data Documentation

| unsigned int TDTrainer::actionDim |

| Action TDTrainer::bufferAction |

| real* TDTrainer::eTraces |

The eligibility traces.

| float TDTrainer::gamma |

Discounting factor. Value should be in [0, 1], typical value in [0.9, 1). The discount factor determines the importance of future rewards. A factor of 0 will make the agent "opportunistic" by only considering current rewards, while a factor approaching 1 will make it strive for a long-term high reward. If the discount factor meets or exceeds 1, the Q values may diverge. Source: http://en.wikipedia.org/wiki/Q-learning#Discount_factor
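As a rough illustration of how gamma weights future rewards, the sketch below computes a discounted return for a finite reward sequence. The function `discountedReturn` is a hypothetical helper written for this example; it is not part of TDTrainer or the surrounding library.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper (not part of TDTrainer): computes the discounted return
//   r_0 + gamma * r_1 + gamma^2 * r_2 + ...
// for a finite sequence of rewards.
double discountedReturn(const std::vector<double>& rewards, double gamma) {
  assert(gamma >= 0.0 && gamma <= 1.0);
  double ret = 0.0;
  // Accumulate from the last reward backwards: G_t = r_t + gamma * G_{t+1}.
  for (auto it = rewards.rbegin(); it != rewards.rend(); ++it) {
    ret = *it + gamma * ret;
  }
  return ret;
}
```

With gamma = 0 only the first reward counts; with gamma = 1 all rewards in the sequence are summed with equal weight.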

| float TDTrainer::lambda |

Trace decay. Value should be in [0, 1], typical value in (0, 0.1]. Heuristic parameter controlling the temporal credit assignment of how an error detected at a given time step feeds back to correct previous estimates. When lambda = 0, no feedback occurs beyond the current time step, while when lambda = 1, the error feeds back without decay arbitrarily far in time. Intermediate values of lambda provide a smooth way to interpolate between these two limiting cases. Source: http://www.research.ibm.com/massive/tdl.html
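To make the role of lambda more concrete, here is a minimal sketch of one common form of trace update, the accumulating trace, where each trace is decayed by gamma * lambda and then incremented by the current gradient. This page does not state which variant TDTrainer implements, so `updateTraces` and its parameters are assumptions made for illustration only.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch (not TDTrainer's actual code): accumulating
// eligibility traces, e <- gamma * lambda * e + gradient.
void updateTraces(std::vector<double>& traces,
                  const std::vector<double>& gradient,
                  double gamma, double lambda) {
  assert(traces.size() == gradient.size());
  assert(lambda >= 0.0 && lambda <= 1.0);
  for (std::size_t i = 0; i < traces.size(); ++i) {
    // Decay the credit carried over from earlier steps, then add the
    // contribution of the current step.
    traces[i] = gamma * lambda * traces[i] + gradient[i];
  }
}
```

With lambda = 0 the traces reduce to the current gradient, so an error only corrects the most recent estimate; larger values let earlier steps keep a share of the credit.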

| unsigned int TDTrainer::observationDim |

| bool TDTrainer::offPolicy |

Controls whether to use the off-policy learning algorithm (Q-Learning) or the on-policy algorithm (Sarsa). Default value: false, i.e. on-policy (Sarsa) learning. NOTE: Off-policy learning should always be used when training on a pre-generated dataset. When the agent is trained online (e.g. in real time), the on-policy algorithm will give the agent better online performance at the expense of finding a sub-optimal solution. Conversely, the off-policy strategy will converge to the optimal solution but will usually show lower online performance, as it will make mistakes more often.
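In the textbook formulations, the two modes differ only in which next-state Q value the update bootstraps from: Sarsa uses the value of the action actually chosen, while Q-Learning uses the maximum over all actions. The sketch below shows that difference in isolation. `tdTarget` and its arguments are hypothetical names for this example and do not reflect TDTrainer's internal code.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch (not TDTrainer's actual code): computes the TD target
//   reward + gamma * Q(next state, a')
// where a' is the action actually taken (on-policy, Sarsa) or the greedy
// action (off-policy, Q-Learning).
double tdTarget(double reward, double gamma,
                const std::vector<double>& nextQ,  // Q values in next state
                std::size_t nextAction,            // action actually chosen
                bool offPolicy) {
  assert(!nextQ.empty() && nextAction < nextQ.size());
  const double bootstrap =
      offPolicy ? *std::max_element(nextQ.begin(), nextQ.end())
                : nextQ[nextAction];
  return reward + gamma * bootstrap;
}
```

When the chosen action happens to be the greedy one, both modes produce the same target; they diverge only on exploratory steps.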

The documentation for this class was generated from the following files:

- src/qualia/rl/TDTrainer.h

- src/qualia/rl/TDTrainer.cpp

1.8.3.1
