#include <NeuralNetwork.h>

| | NeuralNetwork (unsigned int nInputs, unsigned int nHiddens, unsigned int nOutputs, float learningRate=0.01, float decreaseConstant=0, float weightDecay=0, bool linearOutput=false) |

| virtual | ~NeuralNetwork () |

| virtual void | init () |

| | Initializes the network (resets the weights, among other things). |

| virtual unsigned int | nInputs () const |

| | Returns the number of inputs. |

| virtual unsigned int | nHidden () const |

| | Returns the number of hidden neurons. |

| virtual unsigned int | nOutputs () const |

| | Returns the number of outputs. |

| virtual unsigned int | nParams () const |

| | Returns the number of parameters. |

| virtual float | getCurrentLearningRate () const |

| | Returns the current effective learning rate (= learningRate / (1 + t * decreaseConstant)). |

| virtual void | setInput (int i, real x) |

| | Sets input i to value x. |

| virtual void | setInputs (const real *inputs) |

| | Sets the value of the inputs. |

| virtual real | getOutput (int i) const |

| | Get output i. |

| virtual void | getOutputs (real *outputs) const |

| | Get the value of the outputs. |

| virtual void | backpropagate (real *outputError) |

| | Backpropagates the error, updating the derivatives. |

| virtual void | propagate () |

| | Propagates inputs to outputs. |

| virtual void | update () |

| | Updates the weights according to the derivatives. |

| virtual void | save (XFile *file) |

| | Saves the model to a file. |

| virtual void | load (XFile *file) |

| | Loads the model from a file. |

| void | _allocateLayer (Layer &layer, unsigned int nInputs, unsigned int nOutputs, unsigned int &k, bool isLinear=false) |

| void | _deallocateLayer (Layer &layer) |

| void | _propagateLayer (Layer &lower, Layer &upper) |

| void | _backpropagateLayer (Layer &upper, Layer &lower) |

| void | _deallocate () |

| | GradientFunction () |

| virtual | ~GradientFunction () |

| virtual void | clearDelta () |

| | Clears the derivatives. |

| | Function () |

| virtual | ~Function () |

| | NeuralNetwork::NeuralNetwork (unsigned int nInputs, unsigned int nHiddens, unsigned int nOutputs, float learningRate=0.01, float decreaseConstant=0, float weightDecay=0, bool linearOutput=false) |

| virtual | NeuralNetwork::~NeuralNetwork () |

| void | NeuralNetwork::_allocateLayer (Layer &layer, unsigned int nInputs, unsigned int nOutputs, unsigned int &k, bool isLinear=false) |

| void | NeuralNetwork::_backpropagateLayer (Layer &upper, Layer &lower) |

| void | NeuralNetwork::_deallocate () |

| void | NeuralNetwork::_deallocateLayer (Layer &layer) |

| void | NeuralNetwork::_propagateLayer (Layer &lower, Layer &upper) |

| virtual void | NeuralNetwork::backpropagate (real *outputError) |

Backpropagates the error, updating the derivatives.

Implements GradientFunction.
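The sketch below shows how `backpropagate` might fit into one supervised training step together with `setInputs`, `propagate`, `getOutputs` and `update`. Several things here are assumptions rather than documented behavior: the call order, the convention that `outputError` holds output minus target, and `real` being a `float` typedef. `ToyLinearNetwork` and `trainStep` are hypothetical names; the toy class is a single linear layer that only mirrors the documented method names so the sequence can be compiled without the library.

```cpp
#include <cstddef>
#include <vector>

typedef float real;  // assumption: stands in for the library's `real` type

// Hypothetical stand-in (NOT the library class): one linear layer exposing
// the same method names as the documented interface.
class ToyLinearNetwork {
public:
  ToyLinearNetwork(unsigned int nInputs, unsigned int nOutputs, float learningRate)
    : _nIn(nInputs), _nOut(nOutputs), _lr(learningRate),
      _in(nInputs, 0), _out(nOutputs, 0),
      _w(nInputs * nOutputs, 0), _dw(nInputs * nOutputs, 0) {}

  void setInputs(const real* inputs) { _in.assign(inputs, inputs + _nIn); }

  // Forward pass: each output is a weighted sum of the inputs.
  void propagate() {
    for (unsigned int o = 0; o < _nOut; ++o) {
      real sum = 0;
      for (unsigned int i = 0; i < _nIn; ++i) sum += _w[o * _nIn + i] * _in[i];
      _out[o] = sum;
    }
  }

  void getOutputs(real* outputs) const {
    for (unsigned int o = 0; o < _nOut; ++o) outputs[o] = _out[o];
  }

  // Stores the derivative of the squared error with respect to each weight.
  // Assumption: outputError[o] = output[o] - target[o].
  void backpropagate(real* outputError) {
    for (unsigned int o = 0; o < _nOut; ++o)
      for (unsigned int i = 0; i < _nIn; ++i)
        _dw[o * _nIn + i] = outputError[o] * _in[i];
  }

  // Gradient-descent step using the stored derivatives.
  void update() {
    for (std::size_t k = 0; k < _w.size(); ++k) _w[k] -= _lr * _dw[k];
  }

private:
  unsigned int _nIn, _nOut;
  float _lr;
  std::vector<real> _in, _out, _w, _dw;
};

// One training step; returns the squared error measured before the update.
// Works with any type that offers the five methods used below.
template <class Net>
real trainStep(Net& net, const real* inputs, const real* targets,
               unsigned int nOutputs) {
  std::vector<real> outputs(nOutputs), error(nOutputs);
  net.setInputs(inputs);
  net.propagate();
  net.getOutputs(&outputs[0]);
  real squaredError = 0;
  for (unsigned int o = 0; o < nOutputs; ++o) {
    error[o] = outputs[o] - targets[o];
    squaredError += error[o] * error[o];
  }
  net.backpropagate(&error[0]);
  net.update();
  return squaredError;
}
```

Calling `trainStep` repeatedly on the same input and target pair should drive the returned error toward zero for a suitably small learning rate. Whether the real class needs `clearDelta()` between steps is not stated here, so the toy sidesteps the question by overwriting its derivatives on every call.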

| virtual float | NeuralNetwork::getCurrentLearningRate () const |

Returns the current effective learning rate (= learningRate / (1 + t * decreaseConstant)).
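Written as a formula, with $\eta_0$ standing for `learningRate`, $\delta$ for `decreaseConstant` and $t$ for the iteration counter, the schedule reads as follows. The symbols are introduced here only for illustration, and reading $t$ as the number of updates performed so far is an assumption.

```latex
\[
  \eta_t = \frac{\eta_0}{1 + t\,\delta}
\]
```

For instance, with `learningRate = 0.01` and `decreaseConstant = 0.001`, the effective rate after $t = 1000$ iterations would be $0.01 / (1 + 1) = 0.005$. With `decreaseConstant = 0` (the constructor default) the rate stays at `learningRate`.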

| virtual real | NeuralNetwork::getOutput (int i) const |

| virtual void | NeuralNetwork::getOutputs (real *output) const |

Get the value of the outputs.

Reimplemented from Function.

| virtual void | NeuralNetwork::init () |

Initializes the network (resets the weights, among other things).

Reimplemented from Function.

| virtual void | NeuralNetwork::load (XFile *file) |

| inline virtual unsigned int | NeuralNetwork::nHidden () const |

Returns the number of hidden neurons.

| inline virtual unsigned int | NeuralNetwork::nInputs () const |

Returns the number of inputs.

Implements Function.

| inline virtual unsigned int | NeuralNetwork::nOutputs () const |

Returns the number of outputs.

Implements Function.

| inline virtual unsigned int | NeuralNetwork::nParams () const |

| virtual void | NeuralNetwork::propagate () |

Propagates inputs to outputs.

Implements Function.

| virtual void | NeuralNetwork::save (XFile *file) |

| virtual void | NeuralNetwork::setInput (int i, real x) |

Sets input i to value x.

Implements Function.

| virtual void | NeuralNetwork::setInputs (const real *input) |

Sets the value of the inputs.

Reimplemented from Function.

| virtual void | NeuralNetwork::update () |

| float NeuralNetwork::_learningRateDiv |

This value keeps track of the learning rate divider: it is equal to (1 + t * decreaseConstant). Tracking the divider is more efficient than recomputing the learning rate from t at every iteration, because it requires only one floating-point addition per iteration instead of an addition and a multiplication.

| unsigned int NeuralNetwork::_nParams |

| float NeuralNetwork::decreaseConstant |

The learning rate decrease constant. Value should be >= 0, usually in [0, 1]. The decrease constant is applied as a way to slowly decrease the learning rate during gradient descent to help convergence to a better minimum.

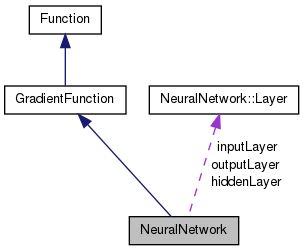

| Layer NeuralNetwork::hiddenLayer |

| Layer NeuralNetwork::inputLayer |

The three MLP layers (inputs -> hidden -> outputs).

| float NeuralNetwork::learningRate |

The starting learning rate. The value should be >= 0, usually in [0, 1]. The learning rate adjusts the speed of training: the higher the learning rate, the faster the network is trained. However, a higher learning rate also gives the network a greater chance of settling in a local minimum, that is, a point at which the network stabilizes on a solution that is not the globally optimal one. In reinforcement learning, the learning rate determines to what extent newly acquired information overrides old information: a factor of 0 makes the agent learn nothing, while a factor of 1 makes the agent consider only the most recent information. Sources: http://pages.cs.wisc.edu/~bolo/shipyard/neural/tort.html and http://en.wikipedia.org/wiki/Q-learning#Learning_rate
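The reinforcement-learning reading of the learning rate can be illustrated with a plain blending rule. `blendEstimate` is a hypothetical helper written for this note, not a library function; it shows only the general idea of a rate of 0 keeping the old value and a rate of 1 replacing it.

```cpp
// Hypothetical helper: moves an old estimate toward new information by a
// fraction given by the learning rate.
//   learningRate == 0 -> the old estimate is returned unchanged
//   learningRate == 1 -> only the new information is kept
inline float blendEstimate(float oldEstimate, float newInformation,
                           float learningRate) {
  return oldEstimate + learningRate * (newInformation - oldEstimate);
}
```

With an old estimate of 2 and new information of 10, a learning rate of 0.5 lands halfway between the two, at 6.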

| Layer NeuralNetwork::outputLayer |

| float NeuralNetwork::weightDecay |

The weight decay. The value should be >= 0, usually in [0, 1]. Weight decay is a simple regularization method that limits the effective number of free parameters in the model so as to prevent over-fitting (in other words, to generalize better). In practice, it penalizes large weights and thus limits the freedom of the model.
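How a weight-decay term typically enters a gradient-descent step can be sketched as below. This page does not state the exact update rule the class uses, so the common L2 formulation is assumed here, and `sgdStepWithWeightDecay` is a hypothetical function written only for illustration.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper: one gradient-descent step with an L2 weight-decay
// term. Assumed rule: w <- w - learningRate * (gradient + weightDecay * w).
// Both vectors are expected to have the same length.
void sgdStepWithWeightDecay(std::vector<float>& weights,
                            const std::vector<float>& gradients,
                            float learningRate, float weightDecay) {
  for (std::size_t k = 0; k < weights.size(); ++k) {
    // The decay term pulls every weight toward zero, so large weights are
    // penalized even when their error gradient is zero.
    weights[k] -= learningRate * (gradients[k] + weightDecay * weights[k]);
  }
}
```

With zero gradients, each call shrinks every weight by the factor `1 - learningRate * weightDecay`, which is the "penalizes large weights" effect described above.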

The documentation for this class was generated from the following files:

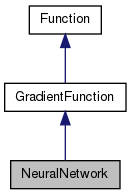

Public Member Functions inherited from GradientFunction

Public Member Functions inherited from Function

Public Attributes inherited from GradientFunction